Image Generation

This example shows how to use GPTCache and OpenAI to implement image generation, that is, to generate images from text descriptions. The OpenAI model generates the images, and GPTCache caches them. When the same or a similar description is requested again, the image can be returned directly from the cache, which speeds up responses and reduces costs.

This bootcamp is divided into three parts: initializing GPTCache, running the OpenAI model to generate images, and starting the service with Gradio. You can also try this example on Google Colab.

Initialize GPTCache

Install gptcache first, then initialize the cache. There are two ways to initialize it. The first uses a map cache (exact match), and the second uses a database cache (similarity search). The second is recommended, but it requires installing the related dependencies.
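The following toy sketch in plain Python makes the difference between the two options concrete. It is not GPTCache code. The cached URL is a placeholder, and difflib.SequenceMatcher stands in for the embedding similarity that the database cache actually relies on.

```python
from difflib import SequenceMatcher
from typing import Optional

# Toy cache contents: prompt -> image URL (placeholder URL, not real data).
cached = {"a white siamese cat": "https://example.com/cat.png"}


def exact_lookup(prompt: str) -> Optional[str]:
    """Map-style cache: only an identical prompt is a hit."""
    return cached.get(prompt)


def similar_lookup(prompt: str, threshold: float = 0.8) -> Optional[str]:
    """Similarity-style cache: return the closest entry if its score reaches the threshold.

    SequenceMatcher compares characters; it only stands in for comparing
    embedding vectors, which is what a similarity cache really does.
    """
    best_key, best_score = None, 0.0
    for key in cached:
        score = SequenceMatcher(None, prompt.lower(), key.lower()).ratio()
        if score > best_score:
            best_key, best_score = key, score
    if best_key is not None and best_score >= threshold:
        return cached[best_key]
    return None


query = "A white Siamese cat"
print(exact_lookup(query))    # None: the capitalization differs
print(similar_lookup(query))  # https://example.com/cat.png
```

The 0.8 threshold is arbitrary in this toy. In GPTCache, the decision is made by the configured similarity evaluation.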

Before running the example, check that the OPENAI_API_KEY environment variable is set by running echo $OPENAI_API_KEY. If it is not set, set it with export OPENAI_API_KEY=YOUR_API_KEY on Unix/Linux/macOS or with set OPENAI_API_KEY=YOUR_API_KEY on Windows.
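The same check can also be done from Python before any request is sent. The helper name openai_key_is_set is hypothetical. It only inspects the environment and does not validate the key with OpenAI.

```python
import os


def openai_key_is_set() -> bool:
    """Return True if OPENAI_API_KEY exists in the environment and is non-empty."""
    return bool(os.environ.get("OPENAI_API_KEY"))


if not openai_key_is_set():
    print("OPENAI_API_KEY is not set; export it before running the example.")
```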

1. Init for exact match cache

cache.init initializes GPTCache. By default, it uses a map to look up cached data. pre_embedding_func pre-processes the request data before it is stored in or looked up in the cache. For more configuration options, refer to Initialize Cache.

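```python
# Exact match alternative: uncomment these lines to use it instead of the similar match cache in section 2.
# from gptcache import cache
# from gptcache.adapter import openai
# from gptcache.processor.pre import get_prompt

# cache.init(pre_embedding_func=get_prompt)
# cache.set_openai_key()
```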
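Conceptually, the exact match cache keys each entry on whatever pre_embedding_func returns. The sketch below imitates that with plain Python. get_prompt_like and MapCache are hypothetical names. get_prompt_like assumes that get_prompt simply returns the prompt field of the request arguments.

```python
from typing import Any, Callable, Dict, Optional


def get_prompt_like(data: Dict[str, Any], **_: Any) -> Any:
    """Hypothetical stand-in for get_prompt: return the `prompt` request argument."""
    return data.get("prompt")


class MapCache:
    """Hypothetical exact match cache keyed by the pre-processed request."""

    def __init__(self, pre_embedding_func: Callable[..., Any]):
        self.pre_embedding_func = pre_embedding_func
        self.store: Dict[Any, Any] = {}

    def get(self, request: Dict[str, Any]) -> Optional[Any]:
        # Look up by the pre-processed key, not by the whole request.
        return self.store.get(self.pre_embedding_func(request))

    def put(self, request: Dict[str, Any], answer: Any) -> None:
        self.store[self.pre_embedding_func(request)] = answer


map_cache = MapCache(get_prompt_like)
map_cache.put({"prompt": "a white siamese cat", "n": 1, "size": "256x256"}, "cat.png")
print(map_cache.get({"prompt": "a white siamese cat", "n": 1, "size": "256x256"}))  # cat.png
print(map_cache.get({"prompt": "a black cat", "n": 1, "size": "256x256"}))  # None
```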

2. Init for similar match cache

When initializing GPTCache, configure the following four parameters:

- pre_embedding_func: pre-processing applied before extracting feature vectors
- embedding_func: the method used to extract the text feature vector
- data_manager: the DataManager used for cache management
- similarity_evaluation: the evaluation method applied after a cache hit
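Together, the four parameters describe a lookup order: pre-process the request, embed it, search the stored vectors, then decide whether the best candidate counts as a hit. The sketch below wires that order together in plain Python. Everything in it is a hypothetical stand-in:

- toy_embedding is a letter-frequency vector rather than the Onnx model.
- A list of rows plays the role of data_manager.
- A cosine threshold plays the role of similarity_evaluation.

```python
import math
from typing import Any, Callable, List, Optional, Tuple

Vector = List[float]


def toy_embedding(text: str) -> Vector:
    """Hypothetical embedding: L2-normalised 26-dim letter counts (spelling, not meaning)."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1
    norm = math.sqrt(sum(c * c for c in counts)) or 1.0
    return [c / norm for c in counts]


def cosine(a: Vector, b: Vector) -> float:
    # Inputs are already normalised, so the dot product is the cosine similarity.
    return sum(x * y for x, y in zip(a, b))


class SimilarCache:
    """Sketch of the lookup order implied by the four cache.init parameters."""

    def __init__(self, pre_embedding_func: Callable[..., Any],
                 embedding_func: Callable[[Any], Vector], threshold: float = 0.9):
        self.pre_embedding_func = pre_embedding_func
        self.embedding_func = embedding_func
        self.threshold = threshold  # stands in for similarity_evaluation
        self.rows: List[Tuple[Vector, Any]] = []  # stands in for data_manager

    def get(self, request: Any) -> Optional[Any]:
        vec = self.embedding_func(self.pre_embedding_func(request))
        best = max(self.rows, key=lambda row: cosine(vec, row[0]), default=None)
        if best is not None and cosine(vec, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, request: Any, answer: Any) -> None:
        self.rows.append((self.embedding_func(self.pre_embedding_func(request)), answer))


similar_cache = SimilarCache(lambda req: req.get("prompt"), toy_embedding)
similar_cache.put({"prompt": "a white siamese cat"}, "cat.png")
print(similar_cache.get({"prompt": "A white Siamese cat!"}))  # cat.png
```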

The data_manager stores the text, the feature vectors, and the image objects. In this example, the text is stored in SQLite and the vectors in Milvus (make sure Milvus is running). You can also configure other vector stores; refer to the VectorBase API. ObjectBase sets where the generated images are saved. This example saves them locally, but you can also use S3 storage.

```python
from gptcache import cache
from gptcache.adapter import openai
from gptcache.processor.pre import get_prompt
from gptcache.embedding import Onnx
from gptcache.similarity_evaluation.distance import SearchDistanceEvaluation
from gptcache.manager import get_data_manager, CacheBase, VectorBase, ObjectBase

onnx = Onnx()
# Text data goes to SQLite
cache_base = CacheBase('sqlite')
# Feature vectors go to Milvus (must already be running on localhost:19530)
vector_base = VectorBase('milvus', host='localhost', port='19530', dimension=onnx.dimension)
# Generated images are saved on the local disk under ./images
object_base = ObjectBase('local', path='./images')
data_manager = get_data_manager(cache_base, vector_base, object_base)

cache.init(
    pre_embedding_func=get_prompt,
    embedding_func=onnx.to_embeddings,
    data_manager=data_manager,
    similarity_evaluation=SearchDistanceEvaluation(),
)
cache.set_openai_key()
```
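The toy model below shows how data_manager splits the data across the three stores configured above. It uses only the standard library:

- SQLite (via sqlite3) holds the text.
- A dict holds the vectors, in place of Milvus.
- Files in a local directory hold the images.

ToyDataManager and its methods are hypothetical and do not reflect GPTCache's actual schema.

```python
import os
import sqlite3
import tempfile
import uuid
from typing import Dict, List, Tuple


class ToyDataManager:
    """Hypothetical three-store layout loosely mirroring CacheBase / VectorBase / ObjectBase."""

    def __init__(self, image_dir: str):
        # Text store: an in-memory SQLite table (CacheBase('sqlite') in the example).
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE cache (id INTEGER PRIMARY KEY, question TEXT, image_path TEXT)"
        )
        # Vector store: a dict keyed by row id (Milvus in the example).
        self.vectors: Dict[int, List[float]] = {}
        # Object store: image files in a local directory (ObjectBase('local', ...) in the example).
        self.image_dir = image_dir
        os.makedirs(image_dir, exist_ok=True)

    def save(self, question: str, vector: List[float], image_bytes: bytes) -> int:
        path = os.path.join(self.image_dir, f"{uuid.uuid4().hex}.png")
        with open(path, "wb") as f:
            f.write(image_bytes)
        cur = self.db.execute(
            "INSERT INTO cache (question, image_path) VALUES (?, ?)", (question, path)
        )
        self.vectors[cur.lastrowid] = vector
        return cur.lastrowid

    def load(self, row_id: int) -> Tuple[str, List[float], bytes]:
        question, path = self.db.execute(
            "SELECT question, image_path FROM cache WHERE id = ?", (row_id,)
        ).fetchone()
        with open(path, "rb") as f:
            return question, self.vectors[row_id], f.read()


with tempfile.TemporaryDirectory() as tmp:
    manager = ToyDataManager(os.path.join(tmp, "images"))
    row = manager.save("a white siamese cat", [0.1, 0.2, 0.3], b"fake image bytes")
    print(manager.load(row))
```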

Run openai image generation#

Then run openai.Image.create to generate the image. The generated images can have a size of “256x256”, “512x512”, or “1024x1024” pixels, and smaller sizes are faster to generate.
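Only the three sizes above are accepted, so it can help to validate the size before the request is sent. The helper below is a hypothetical convenience, not part of GPTCache or the OpenAI library. It also computes pixel counts, which shows that a 1024x1024 image has 16 times as many pixels as a 256x256 one.

```python
from typing import Any, Dict

# The three sizes listed above.
ALLOWED_SIZES = ("256x256", "512x512", "1024x1024")


def image_request(prompt: str, size: str = "256x256", n: int = 1) -> Dict[str, Any]:
    """Build keyword arguments for openai.Image.create, rejecting unsupported sizes early."""
    if size not in ALLOWED_SIZES:
        raise ValueError(f"size must be one of {ALLOWED_SIZES}, got {size!r}")
    return {"prompt": prompt, "n": n, "size": size}


def pixel_count(size: str) -> int:
    """Number of pixels for a 'WIDTHxHEIGHT' size string."""
    width, height = (int(part) for part in size.split("x"))
    return width * height


for size in ALLOWED_SIZES:
    print(size, pixel_count(size))
```

With the adapter imported, a call would then look like openai.Image.create(**image_request("a white siamese cat")).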

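Note that openai here is imported from gptcache.adapter.openai, not from the openai package, so every request goes through GPTCache. A cached result is returned directly; otherwise, the request is sent to OpenAI and the result is stored in the cache.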

```python
response = openai.Image.create(
    prompt="a white siamese cat",
    n=1,
    size="256x256"
)
image_url = response['data'][0]['url']
```

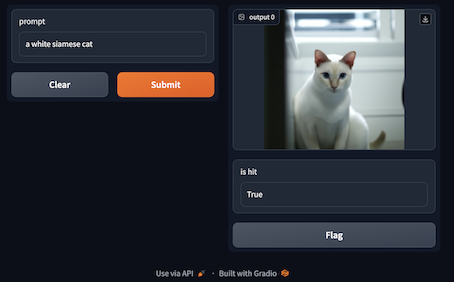
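The adapter note above boils down to a cache-aside wrapper around the image API. The sketch below shows the idea with a fake create function so it runs offline. make_cached_create and fake_create are hypothetical names, and this cache is keyed on the prompt only. The real adapter also relies on the embedding, similarity search, and storage configured with cache.init. The "gptcache" key matches the flag checked with response.get("gptcache", False) later in this example.

```python
from typing import Any, Callable, Dict, List


def make_cached_create(create: Callable[..., Dict[str, Any]]) -> Callable[..., Dict[str, Any]]:
    """Hypothetical cache-aside wrapper: serve repeated prompts from a dict, call through otherwise."""
    store: Dict[Any, Dict[str, Any]] = {}

    def cached_create(**kwargs: Any) -> Dict[str, Any]:
        key = kwargs.get("prompt")
        if key in store:
            # Cache hit: return a copy of the stored response, flagged as cached.
            return {**store[key], "gptcache": True}
        # Cache miss: call the underlying API and remember its response.
        response = create(**kwargs)
        store[key] = response
        return response

    return cached_create


calls: List[Dict[str, Any]] = []


def fake_create(**kwargs: Any) -> Dict[str, Any]:
    """Offline stand-in for the image API; returns a placeholder URL."""
    calls.append(kwargs)
    return {"data": [{"url": f"https://example.com/image-{len(calls)}.png"}]}


create = make_cached_create(fake_create)
first = create(prompt="a white siamese cat", n=1, size="256x256")
second = create(prompt="a white siamese cat", n=1, size="256x256")
print(len(calls), second.get("gptcache", False))  # 1 True
```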

Start with Gradio

Finally, we can start a gradio application for image generation.

First, define the image_generation function, which generates an image from the input text and also returns whether the request hit the cache. Then start the service with Gradio, as shown below:

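```python
def image_generation(prompt):
    # Goes through the GPTCache adapter: served from the cache on a hit, from OpenAI on a miss
    response = openai.Image.create(
        prompt=prompt,
        n=1,
        size="256x256"
    )
    # Cached responses carry a "gptcache" flag; default to False when it is absent
    return response['data'][0]['url'], response.get("gptcache", False)
```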
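You can check the return values of image_generation without an API key by exercising the function against a stub. In the sketch below, openai is a hypothetical SimpleNamespace stand-in, not the GPTCache adapter. It marks a prompt as a cache hit the second time it is seen. Run it in a separate session so it does not shadow the real adapter.

```python
from types import SimpleNamespace
from typing import Any, Dict, Set

_seen_prompts: Set[str] = set()


def _stub_image_create(prompt: str, n: int = 1, size: str = "256x256") -> Dict[str, Any]:
    """Hypothetical stub: returns a placeholder URL and flags repeated prompts as cached."""
    response: Dict[str, Any] = {"data": [{"url": "https://example.com/stub.png"}]}
    if prompt in _seen_prompts:
        response["gptcache"] = True
    _seen_prompts.add(prompt)
    return response


# Stub shaped like openai.Image.create; for offline testing only.
openai = SimpleNamespace(Image=SimpleNamespace(create=_stub_image_create))


def image_generation(prompt):
    response = openai.Image.create(prompt=prompt, n=1, size="256x256")
    return response["data"][0]["url"], response.get("gptcache", False)


print(image_generation("a white siamese cat"))  # ('https://example.com/stub.png', False)
print(image_generation("a white siamese cat"))  # ('https://example.com/stub.png', True)
```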

```python
import gradio

interface = gradio.Interface(
    image_generation,
    gradio.Textbox(lines=1, placeholder="Description Here..."),
    # `shape` is a gradio 3.x argument; gradio 4 removed it in favor of height/width
    [gradio.Image(shape=(200, 200)), gradio.Textbox(label="is hit")],
)
interface.launch(inline=True)
```